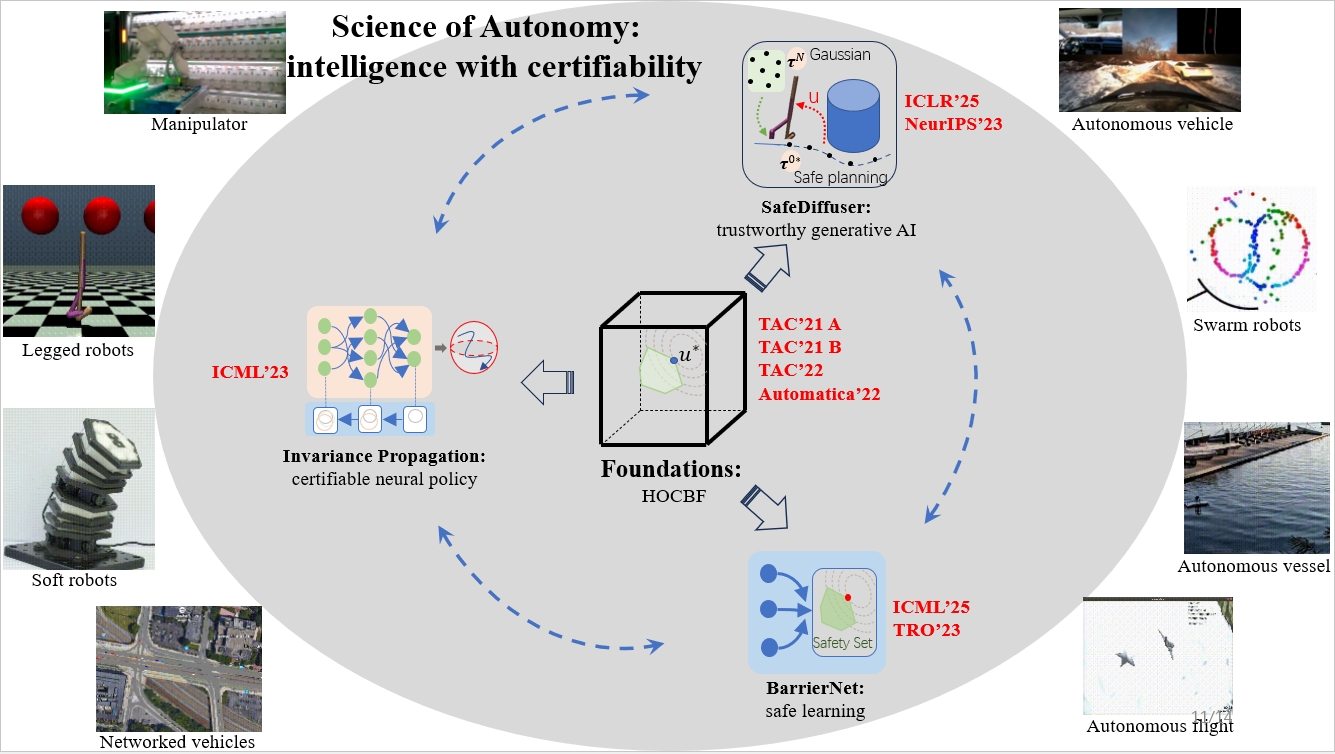

I am an assistant professor leading the Safe Autonomy and Intelligence / AI Lab (SAIL) in the Robotics Engineering Department at WPI, and a research affiliate with MIT CSAIL. I was a Postdoctoral Associate at MIT CSAIL (2021-2025), advised by Prof. Daniela Rus. I received my Ph.D. in Systems Engineering from Boston University in 2021, advised by Prof. Christos G. Cassandras and Prof. Calin Belta.